Your domains are the entry point to your online services, so their reliability and performance are vital for success. With secondary DNS, you can add redundancy to your name servers while minimizing domain resolution latency. Whether you’re a product manager wanting to refine the first stages of a sales funnel or an engineer needing to achieve a service level objective, secondary DNS can help you to achieve your business goals. This article will explain what secondary DNS is, how it fits into the DNS design, and what benefits you get from adding it to your architecture.

What Is Secondary DNS?

A secondary DNS server is a type of DNS server that automatically stores copies of all the DNS records from a primary DNS server. If your primary server cannot be reached or is busy, the secondary DNS server steps in to handle requests. This adds redundancy and ensures the continuous availability of your DNS services, safeguarding against potential disruptions in network services.

How Does DNS Work?

To better understand secondary DNS, let’s take a brief look at the basics of DNS and see where secondary name servers fit into the overall picture.

DNS is short for Domain Name System. It is a distributed system that resolves domain names into IP addresses, other domain names, or arbitrary text. It adds a layer of indirection between the actual addresses of the servers on the internet and the clients that want to access them. It’s an essential part of the internet. Without it, you would have to remember long numbers like 192.168.43.10 or 2001:db8::ff00:42:8329 and update them everywhere when they change.

How Does Name Resolution Work?

Name resolution relies on simple lookup tables called zone files, which are filled with resource records (RRs). Each RR contains the following fields:

- The name field contains a fully qualified domain name, which forms the key of the zone files.

- The type field contains the record type. Important types are:

  - SOA for administrative data, e.g., the zone file version

  - A and AAAA for IPv4 and IPv6 addresses

  - CNAME for aliasing other domain names

  - MX for the domain names of mail servers

  - TXT for arbitrary text

  - NS for the domain names of authoritative name servers; this is also the record you can check for secondary name servers

- The data field contains data like an IP address, another domain, or text. It forms the value of the zone files.

- The time to live (TTL) contains the time a client can cache the resolved data locally.

- The class field contains a protocol class. On the internet, its value is always IN.
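As a rough illustration of the fields listed above, a resource record can be modeled as a small data structure and a zone as a list of such records. This is only a sketch: the `ResourceRecord` class and the `lookup` helper are hypothetical names chosen for this example, not part of any DNS library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResourceRecord:
    name: str           # fully qualified domain name (the lookup key)
    rtype: str          # record type, e.g. "A", "NS", "TXT"
    data: str           # the value: an IP address, a domain name, or text
    ttl: int = 86400    # seconds a client may cache the answer
    rclass: str = "IN"  # protocol class; IN on the internet


def lookup(zone, name, rtype):
    """Return the data of every record that matches the name and type."""
    return [rr.data for rr in zone if rr.name == name and rr.rtype == rtype]


zone = [
    ResourceRecord("example.com.", "NS", "ns1.example.com."),
    ResourceRecord("example.com.", "NS", "ns2.example.com."),
    ResourceRecord("example.com.", "A", "192.0.2.1"),
    ResourceRecord("hello.example.com.", "TXT", "Hello, world!", ttl=300),
]

print(lookup(zone, "example.com.", "NS"))
# ['ns1.example.com.', 'ns2.example.com.']
```

A real name server uses indexed structures rather than a linear scan, but the key and value roles of the name and data fields are the same.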

Look at the following zone file for a fictional example.com zone with multiple NS records. The comments after each semicolon explain the values, and the paragraphs that follow walk through the records.

    $TTL 86400
    example.com.       IN SOA ns1.example.com. hostmaster.example.com. (
                              2023061901 ; serial number YYYYMMDDnn
                              3600       ; refresh every 1 hour
                              1800       ; retry every 30 minutes
                              604800     ; expire after 1 week
                              86400 )    ; minimum TTL of 1 day
    example.com.       IN NS  ns1.example.com. ; could be a primary server
    example.com.       IN NS  ns2.example.com. ; could be a secondary server
    example.com.       IN MX  10 mail.example.com.
    example.com.       IN A   192.0.2.1
    ns1.example.com.   IN A   192.0.2.2
    ns2.example.com.   IN A   192.0.2.3
    mail.example.com.  IN A   192.0.2.4
    hello.example.com. IN TXT "Hello, world!"

The first line defines the default TTL, which a name server automatically applies to each RR that doesn’t have its own TTL defined.

The first RR is the SOA record. It’s mandatory and includes administrative information like a name server, the email address (written with a dot instead of an @ symbol) of the responsible domain admin, a version number of the zone file, and timing values for zone transfers and caching.
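Two details of the SOA record are easy to get wrong by hand: the mailbox notation and the serial number. The sketch below shows one way to handle both. The helper names `rname_to_email` and `next_serial` are made up for this example, and the code assumes the mailbox has no escaped dots in its local part and that the zone uses the YYYYMMDDnn serial convention.

```python
def rname_to_email(rname: str) -> str:
    """Convert an SOA mailbox such as 'hostmaster.example.com.' to an email address.

    Assumption: the local part contains no escaped dots.
    """
    local, _, domain = rname.rstrip(".").partition(".")
    return f"{local}@{domain}"


def next_serial(current: int, today: str) -> int:
    """Return the next YYYYMMDDnn serial; 'today' is a 'YYYYMMDD' string."""
    first_of_day = int(today) * 100 + 1
    # Start a new day at ...01, otherwise just count up from the current serial.
    return max(first_of_day, current + 1)


print(rname_to_email("hostmaster.example.com."))  # hostmaster@example.com
print(next_serial(2023061901, "20230619"))        # 2023061902
print(next_serial(2023061901, "20230620"))        # 2023062001
```

Whatever convention you use, the serial has to increase on every change, because secondary servers rely on it to detect updates.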

The two NS records define the authoritative name servers for this zone. Here, we have two that also use domains from within the zone. ns1.example.com is the primary name server, ns2.example.com the secondary. This is where you would add your secondary name servers so clients can find them.

The MX record defines the email server for this zone.

The A records then map the domains of the name and email servers to IP addresses, which clients can use to connect to the servers. They also include a record for the apex domain, which maps the bare example.com domain to an IP address.

The final TXT record resolves to the string Hello, world! when queried.

How Do Secondary Servers Relate to Primary (Authoritative) Servers?

Adding an NS record that points to a secondary name server turns it into an authoritative name server for a zone; it becomes part of the global DNS hierarchy. An authoritative name server is any server that is named in an NS record for a zone, and it usually holds all the DNS records for its designated zone. Both primary and secondary servers can be authoritative for a zone.

Adding NS records for secondary servers is crucial because clients only know about authoritative servers; they normally don’t know about the concept of primary and secondary name servers. If you attach a secondary server to a primary one but don’t add an NS record for the secondary server, clients can’t find it.

Recursive Name Servers

The counterpart of an authoritative name server is a recursive name server, which isn’t responsible for a zone. Recursive servers relay queries to other name servers for resolution and may cache the results for performance reasons. Since they don’t have zone files that need synchronization, they can’t be secondary name servers.

The DNS Resolution Process

DNS is a distributed system, meaning that no single server is responsible for all domains. Instead, the domain space consists of multiple zones that form a tree structure. Each zone contains one or more domain names and has one or more name servers responsible for it. If a zone isn’t responsible for a subdomain, its zone file contains an RR that indicates which other server is responsible for that subdomain. The name servers responsible for a zone are called the zone’s authoritative name servers. We’ve written an article that explains DNS zones in more detail; check it out if you want to learn more about zones.

The process of adding a new RR to the zone file and resolving it for a client is illustrated in Figure 2.

- A domain admin adds a new RR to the primary (authoritative) name server, for example, an A-record for the domain example.com.

- The secondary name servers either poll the primary servers for updates or are notified and download the updates via AXFR or IXFR.

- Secondary servers that can’t reach the primary server can receive updates from other secondary servers that are authoritative for their zone.

- An application—such as a browser—sends a query for the resolution of the example.com domain to the local resolver.

- The local resolver relays the query to a recursive name server (RNS), which relays it to the authoritative servers that hold the zone files with the RRs.

- The RNS queries a root server, which only holds NS records for top-level domain (TLD) name servers. It returns the NS records for name servers authoritative for the com domain.

- The RNS queries the TLD server, which only holds NS records for the domains under the com TLD. It returns the NS records for the authoritative name servers of the example.com domain.

- The RNS queries one of the name servers responsible for example.com, in this case, a secondary name server. The server is chosen by round-robin. This server returns the data of the A or AAAA record for example.com. The RNS returns the data to the resolver, and the resolver returns it to the application.
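The lookup steps above can be sketched as a toy simulation in which each name server is just a dictionary. Everything here is an assumption for illustration: the server name `tld.example.`, the `SERVERS` table, and the `resolve` function are invented, no network traffic is involved, and the last name server in each referral is picked simply to stand in for the secondary.

```python
# Toy name servers: each maps (name, type) to a list of answers.
ROOT = {("com.", "NS"): ["tld.example."]}
TLD = {("example.com.", "NS"): ["ns1.example.com.", "ns2.example.com."]}
AUTHORITATIVE = {("example.com.", "A"): ["192.0.2.1"]}

SERVERS = {
    "root": ROOT,
    "tld.example.": TLD,
    "ns1.example.com.": AUTHORITATIVE,  # primary
    "ns2.example.com.": AUTHORITATIVE,  # secondary, holds the same zone
}


def resolve(name, rtype="A"):
    """Walk from the root down to an authoritative server, like an RNS."""
    labels = name.rstrip(".").split(".")
    server = "root"
    path = [server]
    # Follow the delegations for "com.", then "example.com.", and so on.
    for i in range(len(labels) - 1, -1, -1):
        zone = ".".join(labels[i:]) + "."
        referral = SERVERS[server].get((zone, "NS"))
        if referral is None:
            break
        server = referral[-1]  # here: ns2, standing in for the secondary
        path.append(server)
    return SERVERS[server].get((name, rtype), []), path


answer, path = resolve("example.com.")
print(answer)  # ['192.0.2.1']
print(path)    # ['root', 'tld.example.', 'ns2.example.com.']
```

Because the primary and the secondary hold the same zone data, the answer is the same no matter which of the two the recursive server ends up asking.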

How Do Secondary Name Servers Synchronize with a Primary Name Server?

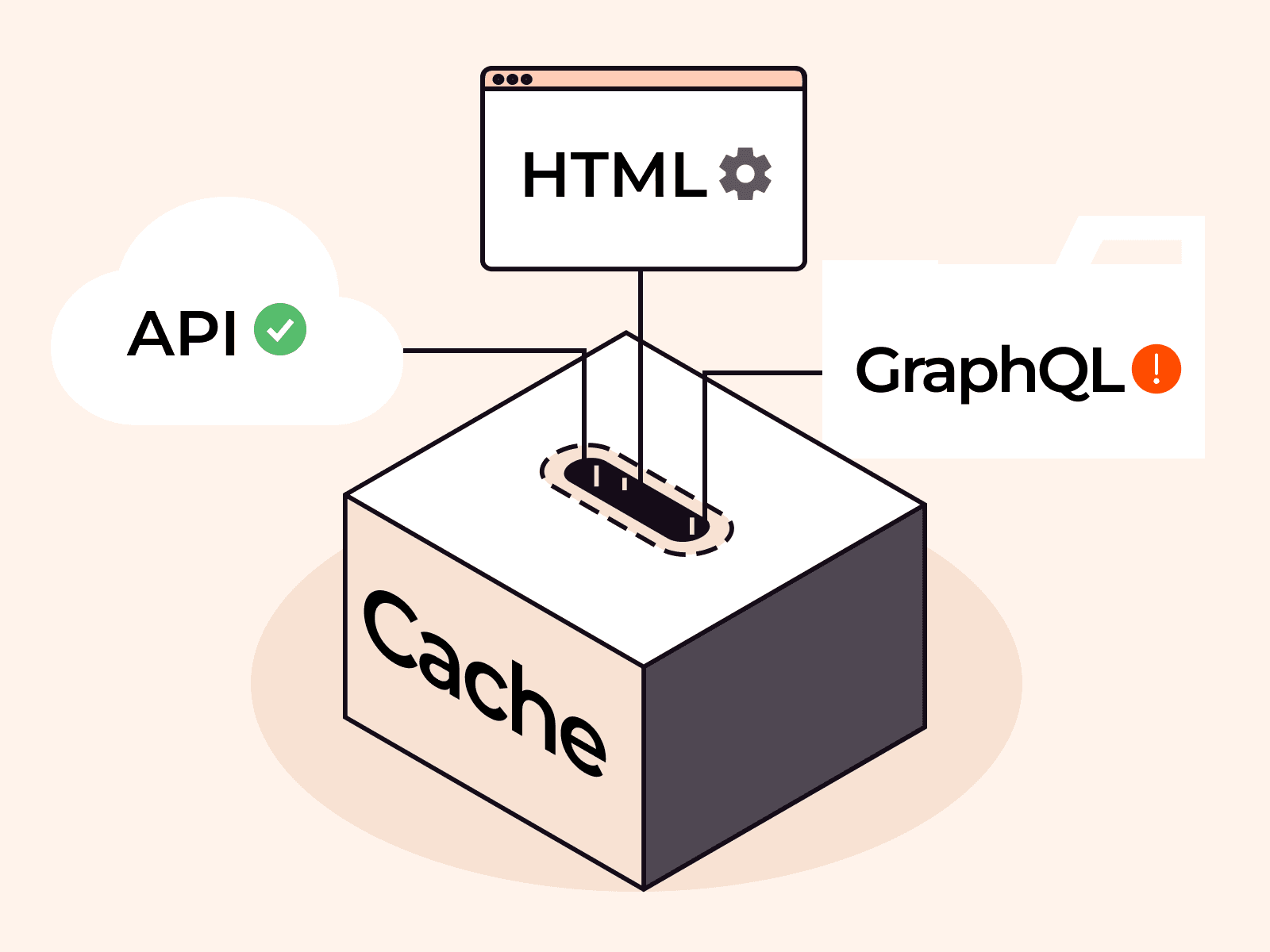

The mechanism to keep name servers in sync is called a zone transfer. The secondary servers either poll other servers in their zone for updates or get notified by their primary server. Both polling and notification rely on the serial number in the SOA record, which acts as the version number of the zone file.

Suppose the secondary name server sees that the serial number has changed. In that case, it initiates a zone transfer, either a full one with the DNS zone transfer protocol (AXFR) or an incremental one with the incremental zone transfer protocol (IXFR), to fetch the latest RRs from the primary server or from other secondary servers that are more up to date.
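The decision a secondary makes can be sketched in a few lines. SOA serials are 32-bit numbers that may wrap around, so the comparison below uses the serial number arithmetic from RFC 1982 rather than a plain `>`. The function names and the `supports_ixfr` flag are hypothetical, and a real server negotiates the transfer type with its peer instead of deciding locally.

```python
def serial_is_newer(candidate: int, current: int) -> bool:
    """Compare 32-bit SOA serials using RFC 1982 serial number arithmetic."""
    distance = (candidate - current) % 2**32
    # Newer means ahead by less than half of the 32-bit number space.
    return 0 < distance < 2**31


def plan_transfer(local_serial: int, remote_serial: int, supports_ixfr: bool = True) -> str:
    """Decide what a secondary does after polling or being notified."""
    if not serial_is_newer(remote_serial, local_serial):
        return "up to date"
    # Prefer the incremental transfer, otherwise fetch the whole zone.
    return "IXFR" if supports_ixfr else "AXFR"


print(plan_transfer(2023061901, 2023061902))       # IXFR
print(plan_transfer(2023061902, 2023061902))       # up to date
print(plan_transfer(1, 2, supports_ixfr=False))    # AXFR
print(serial_is_newer(1, 4294967295))              # True (serial wrapped around)
```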

It is also possible to have multiple primary name servers whose zone files are synchronized manually. In the context of secondary DNS, “manually” means the servers don’t synchronize via DNS-specific mechanisms. It’s possible to synchronize them by other automatic means, like Terraform scripts. To do so, a domain admin would update the zone file definition in the script, and Terraform would apply it to multiple primary name servers on a redeploy.

What Are the Benefits of Secondary DNS?

Now that we understand the zone transfer mechanism, let’s look at the benefits of secondary DNS beyond just automated synchronization.

Improved DNS Redundancy and Resiliency

Adding secondary name servers located in different data centers improves DNS redundancy. If one name server crashes or isn’t available for other reasons, clients can still use the remaining servers to resolve domain names.

Secondary name servers also improve DNS resiliency because they don’t just synchronize with the primary server but also with the other secondary servers in their zone. This way, updated RRs can still propagate to a secondary server that can’t connect to the primary server.

If a primary server is authoritative, it has to both resolve queries and send updates to the secondary servers. Resolving client queries usually has the highest priority, so if the load on the primary server is too high, a secondary might get a notification but be unable to complete a zone transfer. If the zone transfer times out, the secondary server can ask other secondaries for the update, which also lowers the load on the primary.
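The fallback described above boils down to trying a list of sources in order. The following sketch assumes a hypothetical `transfer` callable that raises `TimeoutError` when a source is too busy or unreachable; `fake_transfer` and the server names are stand-ins so the example runs without a network.

```python
def fetch_zone(sources, transfer):
    """Try each source in order; return (source, zone) from the first that answers."""
    for source in sources:
        try:
            return source, transfer(source)
        except TimeoutError:
            continue  # busy or unreachable, so try the next source
    raise RuntimeError("no source could deliver the zone")


def fake_transfer(source):
    """Stand-in for a real zone transfer: the primary is overloaded."""
    if source == "primary.example.com.":
        raise TimeoutError(source)
    return {"serial": 2023061902}


sources = ["primary.example.com.", "ns3.example.com."]
print(fetch_zone(sources, fake_transfer))
# ('ns3.example.com.', {'serial': 2023061902})
```

Listing the primary first keeps it the preferred source while still letting another secondary serve the update when the primary is under load.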

Low DNS Latency

If you branch your operations out to another continent, you can improve latency by deploying a secondary name server in that new location. If users are geographically dispersed, you can spread your secondary name servers all over the globe so that each user can use one close to them. With only a primary server, you must decide on one location that might deliver low latency resolution to only some of your users, and fail to provide low latency for others.

Clients select name servers via NS records and use round-robin to choose a new server for every subsequent query. This mechanism doesn’t help a client find the server with the lowest latency, but you can put multiple servers behind one NS record by giving them a shared Anycast IP address. With Anycast, the network routes each request to just one of the servers that share the address, typically the one that is closest in terms of network routing. This way, clients usually reach a nearby server with low latency.

While Anycast isn’t specific to DNS, it works hand in hand with the zone transfer mechanism. Zone transfers keep the secondary name servers synchronized with the primary server, and Anycast routing directs each client to a nearby server.

DNS Load Balancing

A zone file can have NS records for multiple name servers. The domain resolution process chooses one of these servers using a round-robin algorithm. In this algorithm, the client remembers which name server it already used and selects another one for the next query. This way, each subsequent query hits another server that can handle it while the previous one is still busy.
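A minimal sketch of the round-robin selection described above is shown below. The third name server, ns3.example.com., is an assumption added for the example. Real resolvers may also weigh servers by measured response time, so treat this as an illustration of the principle rather than of any particular resolver.

```python
from collections import Counter
from itertools import cycle

name_servers = ["ns1.example.com.", "ns2.example.com.", "ns3.example.com."]

# cycle() hands out the servers in order and starts over at the end.
rotation = cycle(name_servers)
picks = [next(rotation) for _ in range(6)]

print(picks[:4])
# ['ns1.example.com.', 'ns2.example.com.', 'ns3.example.com.', 'ns1.example.com.']

# Six queries spread evenly: each server handled two of them.
load = Counter(picks)
print(load["ns1.example.com."], load["ns2.example.com."], load["ns3.example.com."])
# 2 2 2
```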

If your user base grows, your infrastructure must also support the additional load. The mantra of cloud is horizontal scaling, meaning that if the load rises, new servers must be added. So secondary DNS, which spreads the load over multiple name servers, works in the spirit of this mantra.

Improved Security for Primary Servers

In a typical DNS setup, you would add an NS record for your primary name server so that clients can query the primary name server directly. Clients use NS records to find name servers, but primary and secondary servers use other means of communication, so it’s possible to synchronize without any of them being present in an NS record. This means if you only add NS records for secondary servers, clients don’t know about your primary server. You can use the primary server to update your RRs and synchronize them with the secondary servers, while the secondary servers are responsible for resolving client queries.

With this technique, your primary server is hidden from the public, can focus on zone transfers, and is protected from potential attackers.

Leveraging Cloud DNS with On-Premises DNS Servers

Many organizations—especially bigger, more mature ones—already run their own name servers on-premises. Often, these are tightly coupled to the infrastructure with custom scripts and processes that create, update, and remove RRs.

Secondary name servers allow these organizations to keep their existing on-premises server as primary and add secondary name servers that run in the cloud. This way, they get the advantages of both the cloud and secondary DNS with minimal changes to their on-premises infrastructure.

Conclusion

Your domains are the entry point to your websites and applications, so it’s crucial to ensure that users can always resolve them promptly. Secondary DNS helps you achieve this goal by letting you add extra name servers to your setup that synchronize with each other automatically. Each secondary name server acts like an authoritative name server and can resolve domain names just like your primary server, but without administrative overhead. When deployed smartly around the globe in tandem with technologies like Anycast, secondary DNS even boosts performance by lowering latency and can help to protect your primary servers from attacks.

Gcore’s DNS hosting allows you to set up zone transfers for secondary DNS with the open-source tool OctoDNS, so you get all the mentioned benefits without thinking about global deployments. Check out our docs to learn how to get started!
