AI models

Prepare a custom AI model for deployment

This guide covers preparing, containerizing, and deploying AI models. It explains how to package models into containers, push them to a registry, and deploy them for text-based inference with vLLM or image generation with Diffusers.

For custom models, modify the image during the build process.

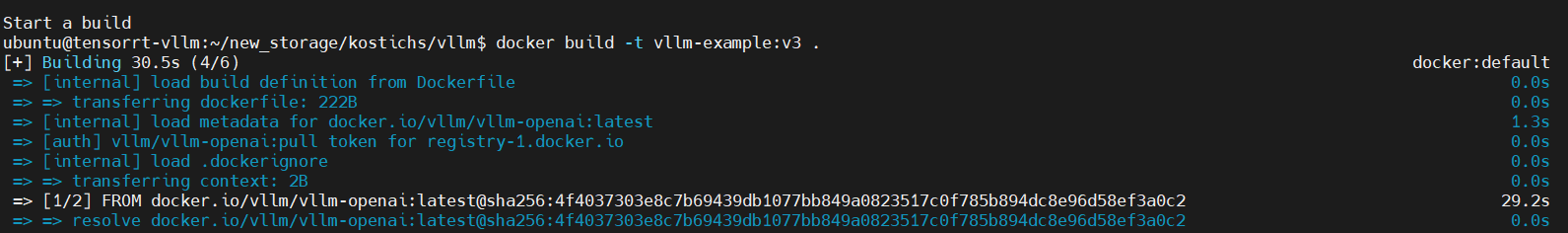

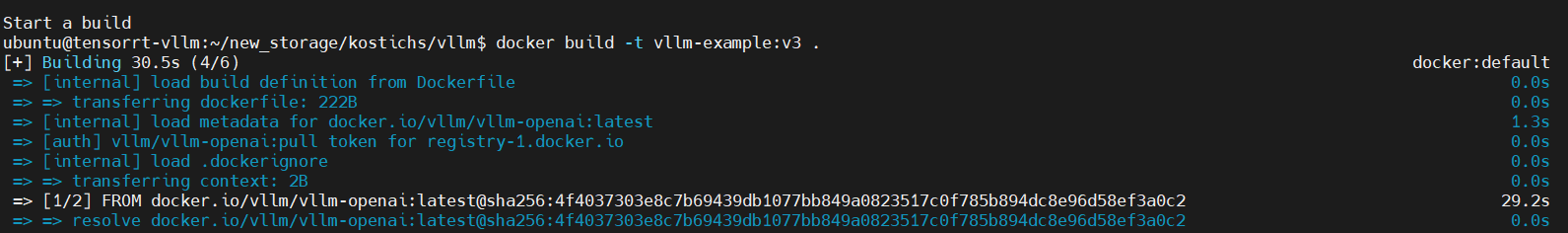

Then, build the container:

These parameters can be combined in a single command. The vLLM documentation provides a full list of configuration options and CLI commands.

At this stage, the container does not yet have inference functionality.

This API allows applications to send HTTP requests for on-demand image generation.

This setup prepares the model and API server to start automatically after deployment.

Train, test, and optimize the model for inference

Before packaging a model into a container, train, validate, and optimize it for inference. This includes training on relevant data, verifying accuracy, and applying optimization techniques such as quantization, pruning, or distillation to reduce computational overhead.

Containerization

To deploy an AI model, package it into a container image that meets industry standards. While there are no strict structural requirements, the image must be compatible with the target registry and deployment environment.

vLLM

vLLM is a fast and easy-to-use library for large language model inference and serving, optimized for efficient execution. It features PagedAttention for memory efficiency, continuous batching, and acceleration via CUDA/HIP graphs and FlashAttention. With support for speculative decoding, chunked prefill, and multiple quantization formats (GPTQ, AWQ, INT4, INT8, FP8), vLLM is well-suited for scalable LLM deployment.

1. Choose a base image

vLLM integrates with Hugging Face and other model repositories, making it ideal for tasks like chatbots, summarization, and code generation. The latest vLLM images are available in the Docker Hub repository. Use the official vLLM base image that supports the required model architecture for deployment:

2. Modify and build the image

To include a specific model from Hugging Face, update the container with the required model and build the image.

If you are building on macOS with Apple Silicon (M chips), force the build to target the linux/amd64 architecture. This prevents potential compatibility issues when running on x86-64 cloud servers.
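The build step can be sketched as a small Python helper that assembles the `docker build` command and optionally pins the target platform. This is a minimal sketch under stated assumptions: the image tag `registry.example.com/my-vllm-model:v1` and the helper names are hypothetical, and the build context is assumed to be the current directory containing your Dockerfile.

```python
import subprocess


def docker_build_command(tag, context=".", platform=None):
    """Return the `docker build` argument list for the given image tag."""
    cmd = ["docker", "build"]
    if platform:
        # --platform pins the target architecture, for example linux/amd64.
        cmd += ["--platform", platform]
    cmd += ["-t", tag, context]
    return cmd


def build_image(tag, context=".", platform=None):
    """Run the build; raises CalledProcessError if docker exits non-zero."""
    subprocess.run(docker_build_command(tag, context, platform), check=True)


# Hypothetical tag, used only for illustration.
TAG = "registry.example.com/my-vllm-model:v1"

# Default build, and the Apple Silicon variant that targets linux/amd64.
print(" ".join(docker_build_command(TAG)))
print(" ".join(docker_build_command(TAG, platform="linux/amd64")))
```

Calling `build_image(TAG, platform="linux/amd64")` on an Apple Silicon machine would run the second command; on an x86-64 build host the `platform` argument can be left out.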

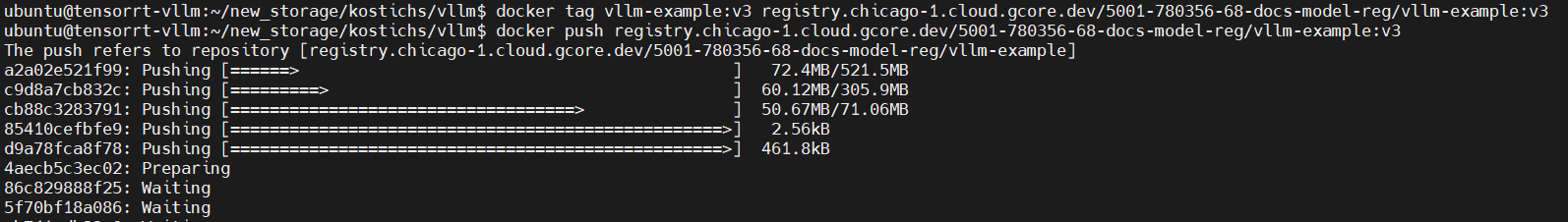

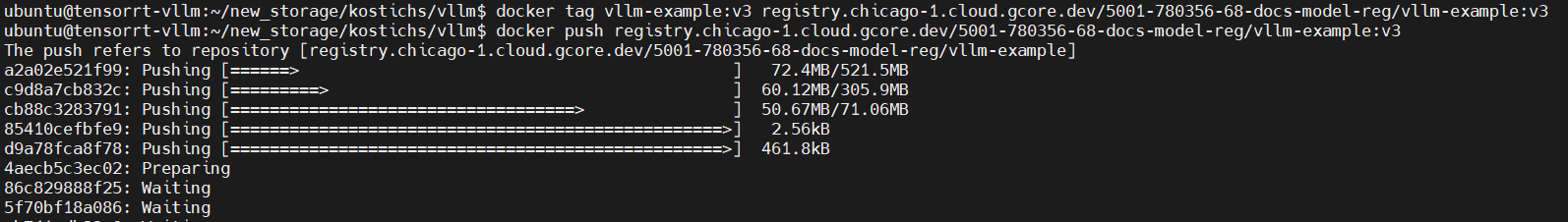

3. Push to registry

After building the image, push it to a registry for deployment:

This process embeds the model in the container, making it available for inference. You can also use the Gcore Container Registry to store and manage your images for seamless deployment.

Configuration options

vLLM provides various options to fine-tune performance and behavior. Some notable configurations include:

| Parameter | Example | Description |
|---|---|---|
| --trust-remote-code | vllm serve state-spaces/mamba-130m-hf --trust-remote-code --host 0.0.0.0 --port 80 | Allows running models that rely on custom Python code from Hugging Face’s Model Hub. By default, this setting is False, meaning vLLM will not execute any remote code unless explicitly allowed. |
| --max-model-len | vllm serve state-spaces/mamba-130m-hf --max-model-len 2048 --host 0.0.0.0 --port 80 | Sets the maximum number of tokens the model can process in a single request. This helps control memory usage and ensures proper processing of long texts. |
| --tensor-parallel-size | vllm serve state-spaces/mamba-130m-hf --tensor-parallel-size 4 --host 0.0.0.0 --port 80 | Splits the model tensors across multiple GPUs to balance memory usage and allow larger models to fit into available hardware. |
| --enable-prefix-caching | vllm serve state-spaces/mamba-130m-hf --enable-prefix-caching --host 0.0.0.0 --port 80 | Improves efficiency by caching repeated prompt prefixes to reduce redundant computations and speed up inference for similar queries. |
| --served-model-name | vllm serve state-spaces/mamba-130m-hf --served-model-name custom-model --host 0.0.0.0 --port 80 | Specifies a custom name for the deployed model, making it easier to reference in API requests. |
| --enable-reasoning | vllm serve deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B --enable-reasoning --reasoning-parser deepseek_r1 --host 0.0.0.0 --port 80 | Enables reasoning mode, allowing models to provide intermediate reasoning steps for better insight into the decision-making process. Requires --reasoning-parser to extract reasoning content from the model output. |
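Several of the flags in the table can be passed in one `vllm serve` invocation. The following is a minimal sketch of a helper that assembles such a command from keyword arguments. The helper name `vllm_serve_command` is hypothetical; the flags, model ID, host, and port are the ones used in the table. It only builds the argument list and does not check whether a given combination is supported by a particular model.

```python
import shlex


def vllm_serve_command(model, host="0.0.0.0", port=80, **options):
    """Build a `vllm serve` argument list from keyword options.

    Keyword names map to CLI flags by replacing underscores with hyphens,
    so max_model_len=2048 becomes `--max-model-len 2048`.
    """
    cmd = ["vllm", "serve", model]
    for name, value in options.items():
        flag = "--" + name.replace("_", "-")
        if value is True:
            # Boolean switches such as --enable-prefix-caching take no value.
            cmd.append(flag)
        elif value is not None and value is not False:
            cmd += [flag, str(value)]
    cmd += ["--host", host, "--port", str(port)]
    return cmd


cmd = vllm_serve_command(
    "state-spaces/mamba-130m-hf",
    max_model_len=2048,
    enable_prefix_caching=True,
    served_model_name="custom-model",
)
# Print the command as a single shell-quoted line.
print(shlex.join(cmd))
```

The printed line can be used as the container's start command, or the list can be passed to `subprocess.run` directly.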

Diffusers

Diffusers is a library for running diffusion-based image generation models. Unlike vLLM, which provides a standardized inference system, it lacks a built-in serving interface, requiring additional code for integration. The environment must include CUDA, cuDNN, and PyTorch for smooth deployment. The models can be retrieved from the Hugging Face model hub.

1. Choose a base image

Instead of building from scratch, use an official image from the NVIDIA NGC AI Catalog or PyTorch. The following Dockerfile starts with a PyTorch base image and installs Diffusers:

2. Implement an inference API

An API must be implemented because Diffusers does not include a built-in inference server. The following script loads a Stable Diffusion model, accepts a text prompt via API, and returns a generated image.

3. Create a Dockerfile
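For reference when packaging the service, the inference API described above can be sketched as follows. This is a minimal sketch, not the guide's own script: it uses Python's standard-library `http.server` instead of a web framework, and the model ID, the `/generate` path, the port, and all function names are assumptions made for illustration. The `diffusers` and `torch` imports are deferred to `load_pipeline` so the module can be imported on a machine without a GPU stack.

```python
import io
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Assumption: illustrative model ID; the guide does not name a specific model.
MODEL_ID = "stable-diffusion-v1-5/stable-diffusion-v1-5"


def load_pipeline(model_id=MODEL_ID):
    """Load a Stable Diffusion pipeline onto the GPU."""
    import torch
    from diffusers import StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)
    return pipe.to("cuda")


def parse_prompt(body):
    """Extract a non-empty "prompt" string from a JSON request body."""
    data = json.loads(body)
    prompt = data.get("prompt") if isinstance(data, dict) else None
    if not isinstance(prompt, str) or not prompt.strip():
        raise ValueError("body must be a JSON object with a non-empty prompt")
    return prompt


def generate_png(pipe, prompt):
    """Run the pipeline and return the first generated image as PNG bytes."""
    image = pipe(prompt).images[0]
    buffer = io.BytesIO()
    image.save(buffer, format="PNG")
    return buffer.getvalue()


def make_handler(pipe):
    """Create a request handler class bound to an already loaded pipeline."""

    class Handler(BaseHTTPRequestHandler):
        def do_POST(self):
            if self.path != "/generate":  # hypothetical route
                self.send_error(404)
                return
            length = int(self.headers.get("Content-Length", 0))
            try:
                prompt = parse_prompt(self.rfile.read(length))
            except ValueError as exc:  # also covers malformed JSON
                self.send_error(400, str(exc))
                return
            png = generate_png(pipe, prompt)
            self.send_response(200)
            self.send_header("Content-Type", "image/png")
            self.send_header("Content-Length", str(len(png)))
            self.end_headers()
            self.wfile.write(png)

    return Handler


def serve(port=8000):
    """Load the model once, then serve requests until the process stops."""
    HTTPServer(("0.0.0.0", port), make_handler(load_pipeline())).serve_forever()
```

Calling `serve()` as the container's start command loads the model once at startup. A client would then send a POST request to `/generate` with a body such as `{"prompt": "a lighthouse at dusk"}` and receive PNG bytes in the response.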

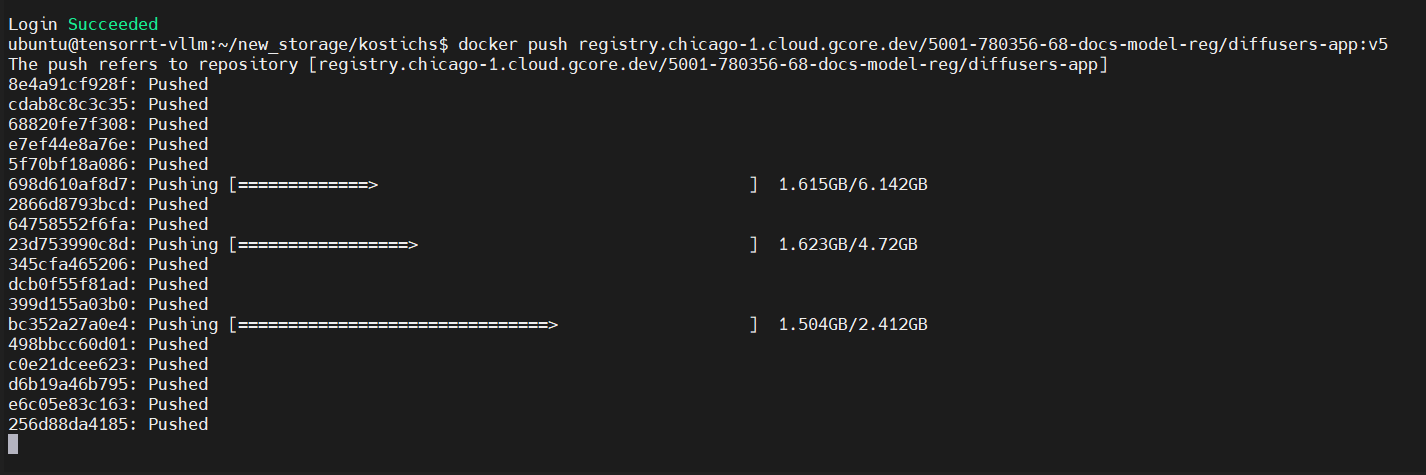

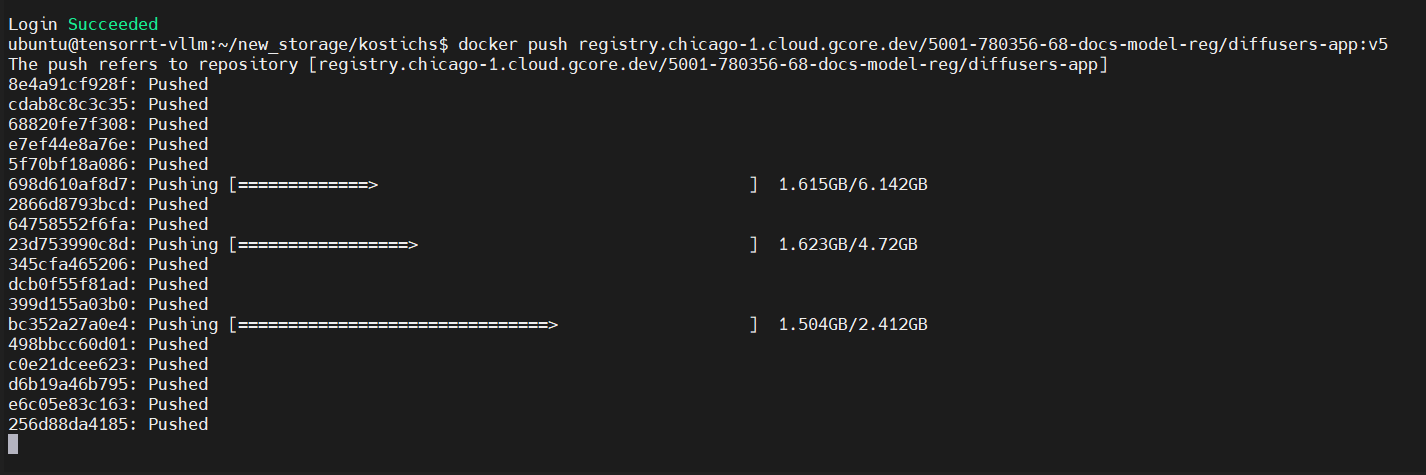

The Dockerfile should include Diffusers and the API service. If authentication is required for specific models, provide the necessary token.

4. Push to registry

After building the container, push it to a registry for deployment:

Assign a new tag when updating the container to prevent Kubernetes from using outdated images.
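One way to guarantee a fresh tag on every update is to derive it from the build time. The sketch below assumes a hypothetical registry host, repository name, and local image name, and uses a UTC timestamp as the tag; a version number or commit hash would work equally well. It builds the `docker tag` and `docker push` commands and only runs them when `push_image` is called.

```python
import subprocess
from datetime import datetime, timezone


def timestamp_tag(now=None):
    """Return a tag such as 20250102-030405 based on the UTC time."""
    now = now or datetime.now(timezone.utc)
    return now.strftime("%Y%m%d-%H%M%S")


def image_ref(registry, repository, tag):
    """Compose a full image reference: registry/repository:tag."""
    return f"{registry}/{repository}:{tag}"


def push_commands(local_image, remote_ref):
    """Return the docker commands that retag a local image and push it."""
    return [
        ["docker", "tag", local_image, remote_ref],
        ["docker", "push", remote_ref],
    ]


def push_image(local_image, remote_ref):
    """Run the tag and push commands, stopping at the first failure."""
    for cmd in push_commands(local_image, remote_ref):
        subprocess.run(cmd, check=True)


# Hypothetical names, used only for illustration.
ref = image_ref("registry.example.com", "team/diffusers-api", timestamp_tag())
for cmd in push_commands("diffusers-api:latest", ref):
    print(" ".join(cmd))
```

Because each push produces a reference that has never existed before, a deployment that points at the new reference cannot be satisfied by an older image cached on a node.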

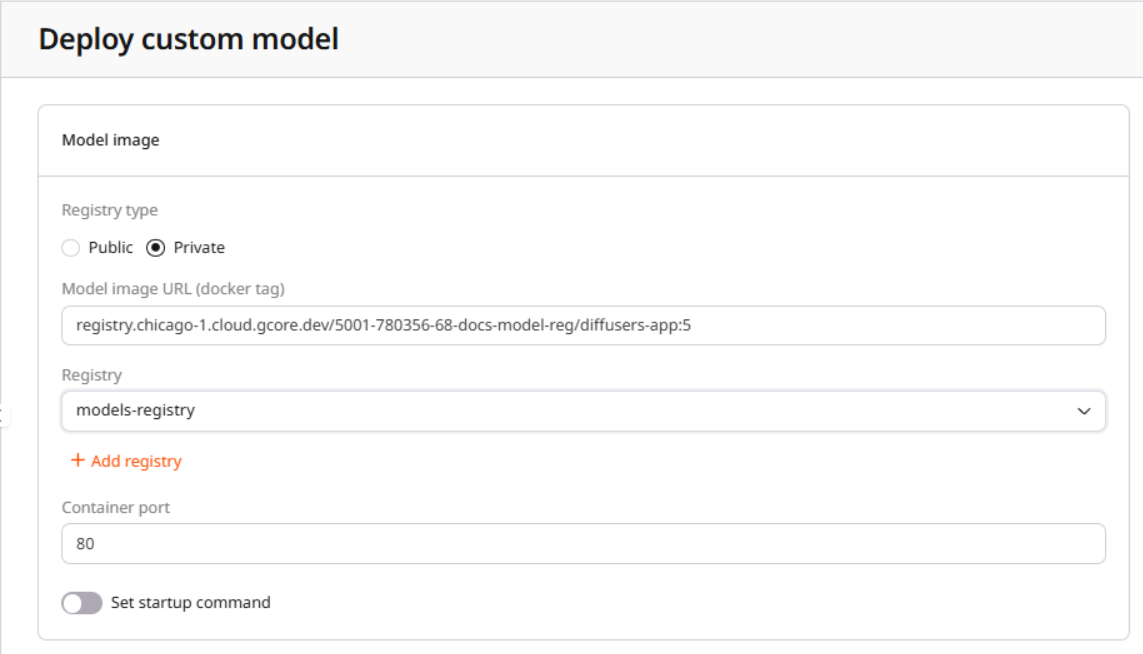

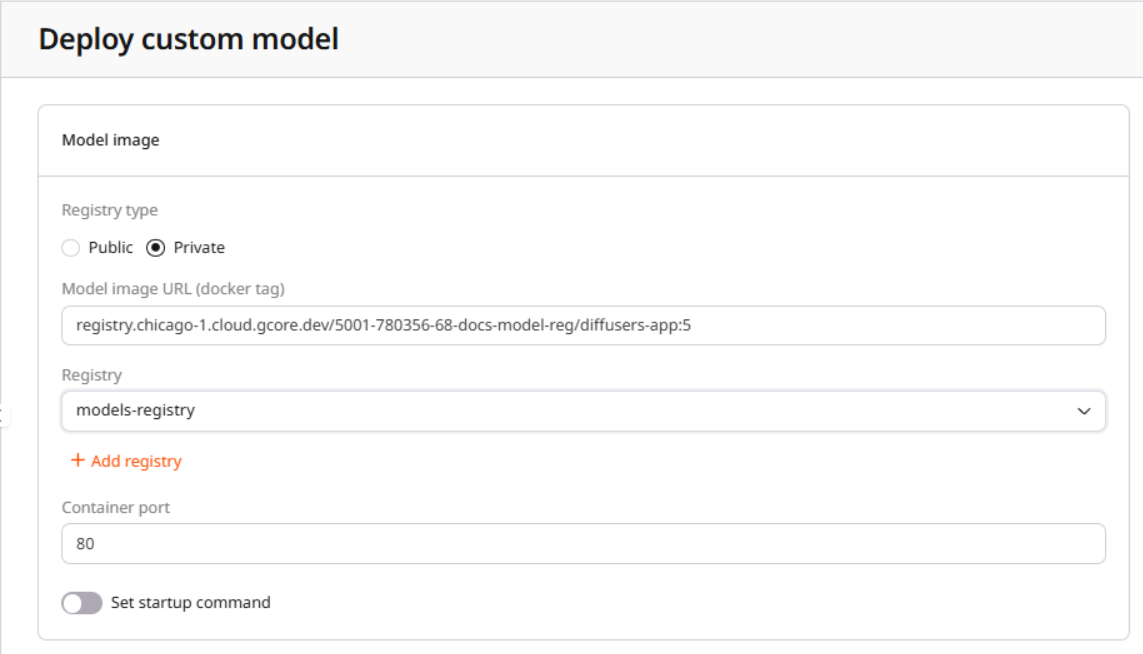

Deployment

After uploading the container to a registry, you can deploy it. During this process, enter the model image URL from the registry. If the registry is public, use the model image URL directly. If the registry is private, provide authentication credentials, including the URL, username, and password. Find more details in the guide on adding a container image registry. After deployment, you will receive an endpoint to send requests and receive responses from the model. Additional instructions are included in the inference instance deployment guide.
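For a vLLM deployment, requests to the endpoint can be sketched with Python's standard library. `vllm serve` exposes an OpenAI-compatible HTTP API, so the example targets the `/v1/completions` route. The endpoint URL and function names are hypothetical, the model name assumes the image was started with `--served-model-name custom-model`, and any authentication headers your deployment requires are omitted.

```python
import json
import urllib.request


def completion_request(endpoint, model, prompt, max_tokens=64):
    """Build a POST request for the OpenAI-compatible completions route."""
    payload = {"model": model, "prompt": prompt, "max_tokens": max_tokens}
    return urllib.request.Request(
        endpoint.rstrip("/") + "/v1/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


# Hypothetical endpoint; replace it with the URL shown after deployment.
request = completion_request(
    "https://my-deployment.example.com", "custom-model", "Write a haiku about GPUs."
)
print(request.get_method(), request.full_url)
```

Calling `send(request)` performs the network call; in the OpenAI-compatible response format, the generated text is found under `choices[0]["text"]`.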
